Applying artificial intelligence (AI) to Earth observation data, in particular the automatic feature extraction and layered abstraction that power deep learning, can revolutionize the detection of marine debris and support ongoing efforts to clean the world’s oceans. This combination can inform targeted monitoring and cleanup efforts, and it can contribute to scientific research on how marine debris is transported.

Current approaches to detecting marine debris rely mostly on coarse, lower-resolution satellite imagery or on aerial data, which is expensive to acquire. Small satellite imagery, by contrast, combines high spatial and temporal resolution with broad coverage. This makes it especially well suited to monitoring marine debris, whose location is driven by many shifting variables such as currents, weather, and human activities.

In addition, small satellite imagery has a higher spatial resolution than imagery from most open-access satellites. This finer resolution may make it feasible to detect smaller accumulations of ocean plastic.
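A quick back-of-the-envelope calculation shows why resolution matters. The sketch below assumes nominal ground sample distances of about 3 m for PlanetScope and 10 m for the Sentinel-2 visible and near-infrared bands, and a hypothetical 30 m × 30 m debris patch; it ignores pixel-grid alignment, blur, and contrast, all of which also affect real detectability.

```python
def pixels_covered(patch_side_m: float, gsd_m: float) -> float:
    """Approximate number of pixels covered by a square patch of side
    patch_side_m at ground sample distance gsd_m (ignores grid alignment)."""
    return (patch_side_m / gsd_m) ** 2


# Hypothetical 30 m x 30 m patch at nominal ~3 m and 10 m resolutions
for name, gsd in [("PlanetScope (~3 m)", 3.0), ("Sentinel-2 (10 m)", 10.0)]:
    print(f"{name}: {pixels_covered(30.0, gsd):.0f} pixels")
```

The same patch spans about 100 pixels at 3 m but only 9 pixels at 10 m, so a model has far more spatial detail to work with at the finer resolution.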

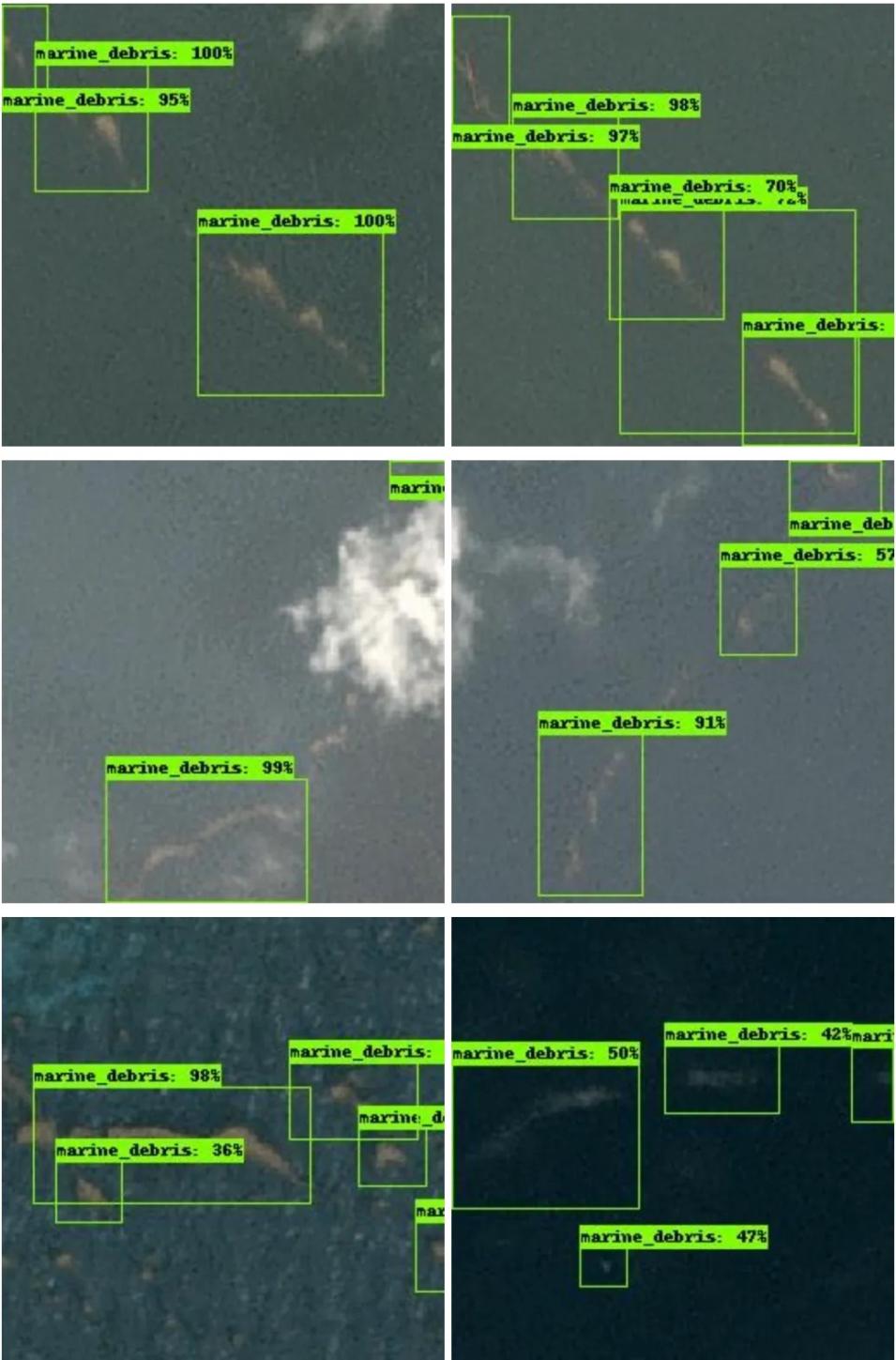

The IMPACT team first conducted an extensive literature review on marine debris and plastic detection to locate validated marine debris events. The locations and timestamps of these validated observations served as the reference for where and when to search for overlapping imagery. Most of the observations came from the Bay Islands in Honduras, supplemented by observations from Accra, Ghana, and Mytilene, Greece (Kikaki et al. 2020, Biermann et al. 2020, Topouzelis et al. 2019).
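Using validated observations as a spatiotemporal reference boils down to a simple matching rule: keep scenes whose footprint contains the observation and whose acquisition date falls close to the observation date. The sketch below shows only that matching logic on local metadata; the `Observation` and `Scene` classes, the bounding-box footprints, and the `max_days` window are hypothetical simplifications, and in practice the filtering would be done through the imagery provider's own search tools.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple


@dataclass
class Observation:
    lon: float
    lat: float
    day: date


@dataclass
class Scene:
    scene_id: str
    bbox: Tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat)
    acquired: date


def overlapping_scenes(obs: Observation, scenes: List[Scene], max_days: int = 1) -> List[Scene]:
    """Return scenes whose footprint contains the observation point and whose
    acquisition date is within max_days of the observation date."""
    window = timedelta(days=max_days)
    matches = []
    for s in scenes:
        min_lon, min_lat, max_lon, max_lat = s.bbox
        inside = min_lon <= obs.lon <= max_lon and min_lat <= obs.lat <= max_lat
        if inside and abs(s.acquired - obs.day) <= window:
            matches.append(s)
    return matches


# Placeholder coordinates and dates, not observations from the cited studies
obs = Observation(lon=-86.5, lat=16.3, day=date(2020, 1, 1))
scenes = [
    Scene("a", (-87.0, 16.0, -86.0, 17.0), date(2020, 1, 2)),  # overlaps, 1 day apart
    Scene("b", (-87.0, 16.0, -86.0, 17.0), date(2020, 1, 5)),  # overlaps, too late
    Scene("c", (-80.0, 10.0, -79.0, 11.0), date(2020, 1, 1)),  # wrong place
]
print([s.scene_id for s in overlapping_scenes(obs, scenes)])
```

Only scene `a` satisfies both the spatial and temporal criteria; every candidate match would still need the manual verification step the team performed.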

Next, they searched Planet’s archive of 3-meter imagery for the dates reported in the literature and manually verified the presence of marine debris to create the labeled dataset. This imagery suited the research because it combines moderately high spatial resolution, high temporal resolution, global coverage of coastlines, and a near-infrared (NIR) channel, the part of the electromagnetic spectrum in which plastic reflects strongly.
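One simple way to exploit the NIR channel is a normalized difference between NIR and red, which is typically negative over open water and higher over floating material. This is not the team's method, just a minimal sketch assuming a 4-band array ordered Blue, Green, Red, NIR; the `threshold` value is hypothetical, and such an index highlights floating material in general (including vegetation), not plastic specifically.

```python
import numpy as np


def normalized_difference(nir, red, eps=1e-6):
    """Per-pixel (NIR - Red) / (NIR + Red); eps guards against division by zero."""
    nir = np.asarray(nir, dtype=np.float64)  # cast first to avoid unsigned-int underflow
    red = np.asarray(red, dtype=np.float64)
    return (nir - red) / (nir + red + eps)


def floating_candidates(scene, threshold=0.0):
    """scene: array shaped (4, rows, cols) in an assumed B, G, R, NIR band order.
    Returns the index and a mask of pixels above a hypothetical threshold."""
    red, nir = scene[2], scene[3]
    nd = normalized_difference(nir, red)
    return nd, nd > threshold


# Two made-up pixels: water-like (low NIR) and floating-material-like (high NIR)
scene = np.array([[[200, 200]], [[250, 300]], [[300, 400]], [[100, 900]]], dtype=np.uint16)
nd, mask = floating_candidates(scene)
print(nd.round(3), mask)
```

A learned detector can pick up far subtler patterns than a single index, but the index illustrates why having an NIR band is useful in the first place.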

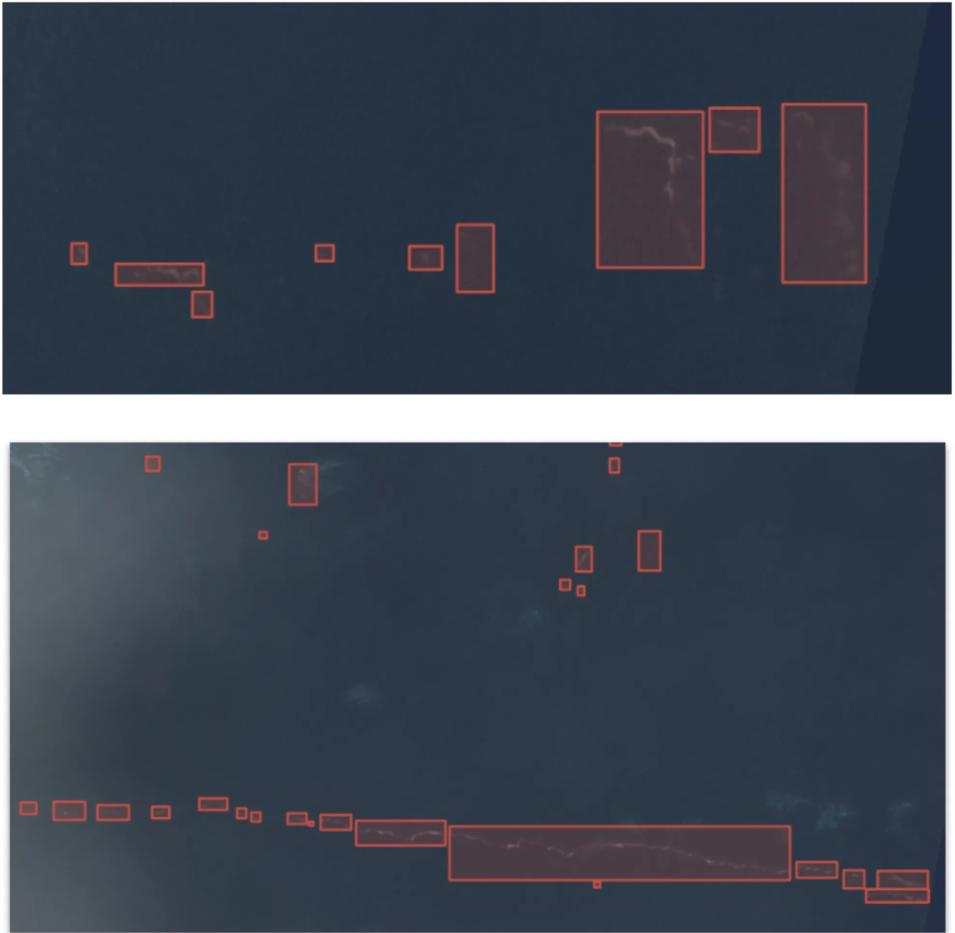

With an imagery dataset identified, the next step was to take inventory of the features that the imagery’s specifications would make feasible to detect. With PlanetScope, the team anticipated that the model could detect aggregated debris flotsam as well as some megaplastics, including medium to large ghost (i.e., lost or abandoned) fishing nets. The final custom labeled dataset consisted of 1,370 polygons containing marine debris. The labeled portion of each PlanetScope scene was divided into smaller 256 × 256 pixel tiles, which were used to train an object detection model.
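At roughly 3 m per pixel, a 256 × 256 tile covers about 768 m on a side. The sketch below shows one way to cut a scene into such tiles and carry the labels along. It assumes each labeled polygon has been reduced to an axis-aligned bounding box in scene pixel coordinates (the usual target format for object detectors), drops partial tiles at the scene edges, and clips boxes that straddle tile boundaries; the function names are illustrative, not taken from the team's code.

```python
import numpy as np

TILE = 256  # tile edge length in pixels


def polygon_to_bbox(vertices):
    """Reduce a polygon [(x, y), ...] to (x_min, y_min, x_max, y_max)."""
    xs, ys = zip(*vertices)
    return (min(xs), min(ys), max(xs), max(ys))


def tile_scene(image, boxes, tile=TILE):
    """Split a (rows, cols, bands) array into non-overlapping tile x tile chips.
    Each box is clipped to every tile it intersects and shifted into
    tile-local coordinates. Partial tiles at the right/bottom edges are dropped."""
    rows, cols = image.shape[:2]
    chips = []
    for r0 in range(0, rows - tile + 1, tile):
        for c0 in range(0, cols - tile + 1, tile):
            chip = image[r0:r0 + tile, c0:c0 + tile]
            local = []
            for x0, y0, x1, y1 in boxes:
                # Intersect the box with this tile's extent
                cx0, cy0 = max(x0, c0), max(y0, r0)
                cx1, cy1 = min(x1, c0 + tile), min(y1, r0 + tile)
                if cx1 > cx0 and cy1 > cy0:
                    local.append((cx0 - c0, cy0 - r0, cx1 - c0, cy1 - r0))
            chips.append(((r0, c0), chip, local))
    return chips


# Hypothetical 512 x 512, 4-band scene with one polygon straddling two tiles
image = np.zeros((512, 512, 4), dtype=np.uint16)
boxes = [polygon_to_bbox([(250, 10), (270, 10), (270, 30), (250, 30)])]
for origin, chip, local in tile_scene(image, boxes):
    print(origin, chip.shape, local)
```

Clipping keeps a label in every tile that contains part of the debris; in practice one might also discard very thin slivers left at tile edges, or use overlapping tiles so that small objects are less often split.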