In a recent IMPACT blog article, Kaylin Bugbee, lead of the Science Discovery Engine project, and Rahul Ramachandran, IMPACT project manager, explored the ethical implications of using generative large language models (LLMs) in the context of scientific documentation. These machine learning models are examples of foundation models, which rely on neural network learning algorithms to analyze massive quantities of text data. After substantial training, LLMs are capable of diverse tasks, including language prediction and novel text generation.

In their article, Kaylin and Rahul discussed how the proliferation of LLMs has recently given rise to applications such as OpenAI’s GPT-3 and Google’s BERT (Bidirectional Encoder Representations from Transformers), and they considered how these tools may influence how scientific output is accessed and legitimized. Given that IMPACT is beginning a partnership with IBM to build AI foundation models for several Earth data analysis projects, the fundamental question of how LLM development and use intersects with open science principles is worthy of thorough examination. IMPACT recently capitalized on the opportunity to jointly delve into this topic with a group visiting from IBM research through a moderated, informal lunch-time discussion regarding open science and LLMs.

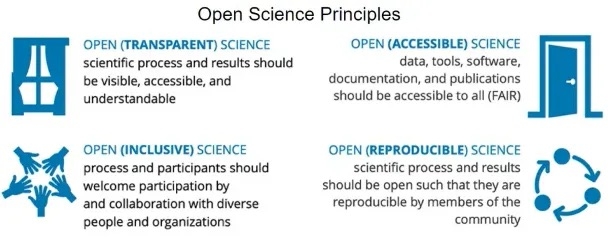

Kaylin opened the exchange by sharing how both NASA and IMPACT view the definition and core tenets of open science. She explained:

"Open science is a collaborative culture enabled by technology that empowers the open sharing of data, information, and knowledge within the scientific community and the wider public to accelerate scientific research and understanding."

She also acknowledged that there are many different definitions and interpretations of open science, but over time, NASA has reviewed and distilled these variations into a relevant framework for its own use.

IBM researchers Priya Nagpurkar and Carlos Costa agreed that these open science principles “resonated” with them and they saw value in an “open source approach.” Priya noted that it’s important to be “intentional” with an open source methodology, defining what achievements are desired, how broadly information may be used, and whether others will adopt and contribute back to the “ecosystem.”

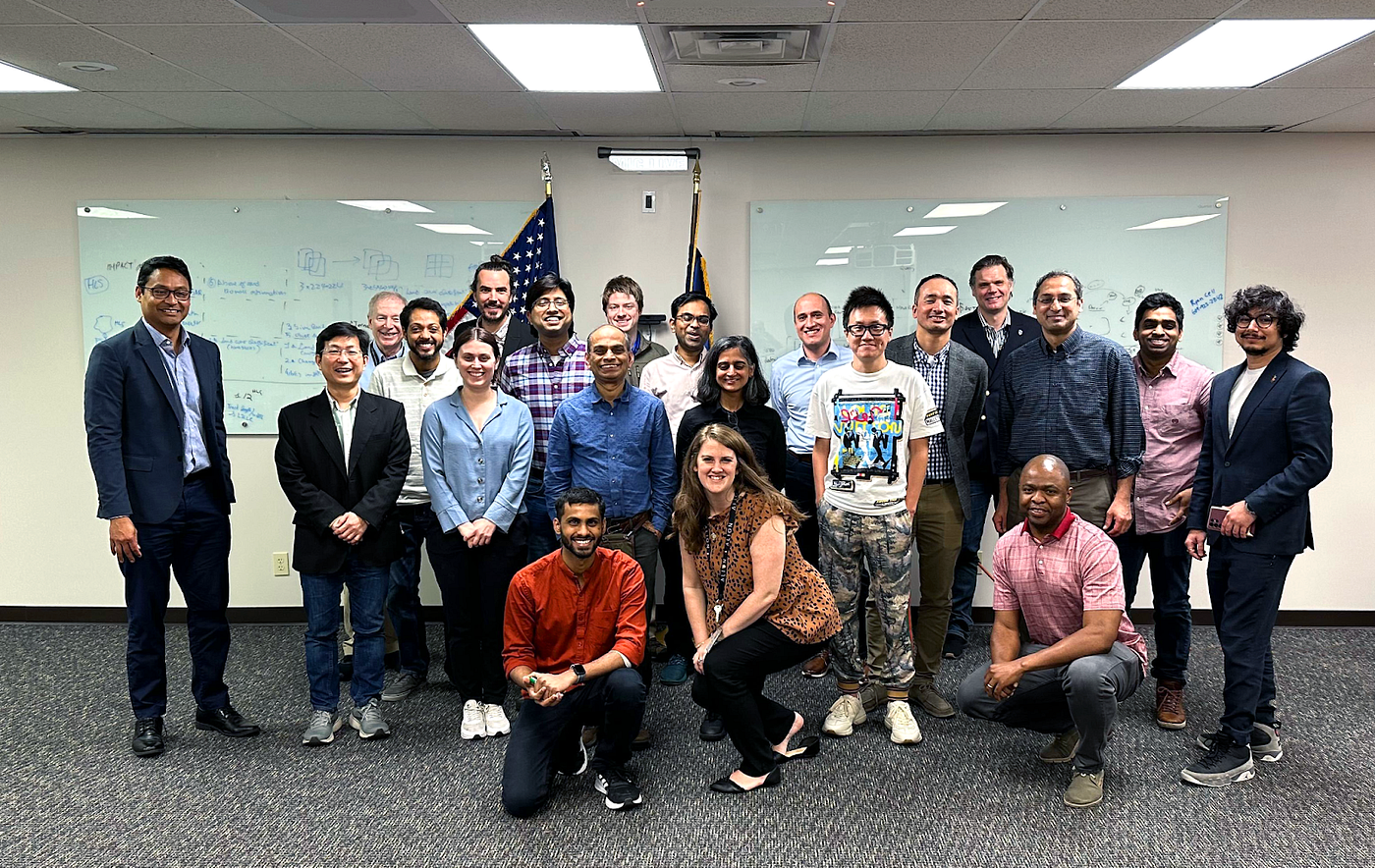

The group of about 20 participants from NASA/IMPACT, NASA’s High End Computing (HEC) Program, the U.S. Department of Energy’s (DOE) Oak Ridge National Laboratory (ORNL), and IBM Research shared their thoughts regarding several prompts prepared by Kaylin and Rahul. The questions were designed to generate specific discussions about how LLMs may be involved in and influence scientific practices and open science initiatives.

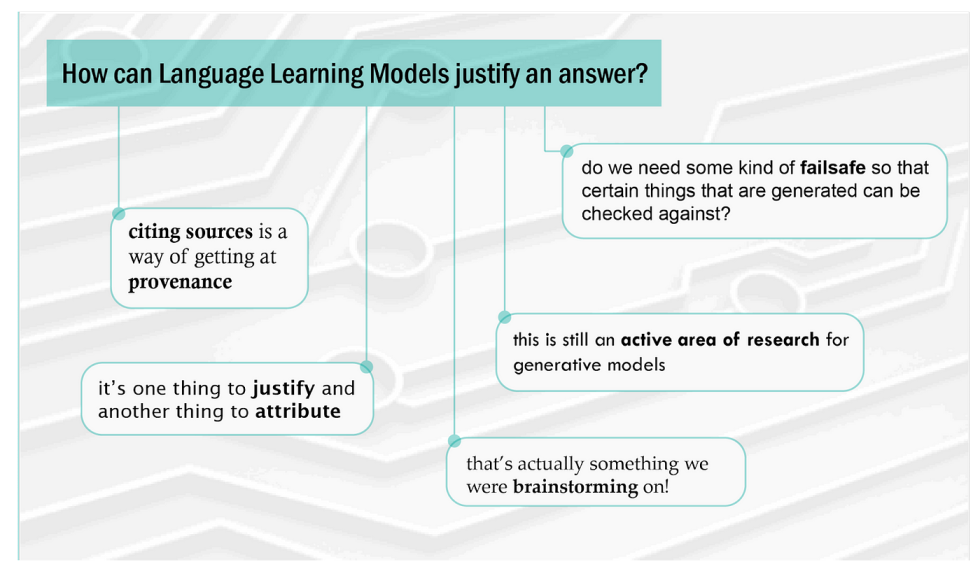

How can LLMs justify an answer?

For people to be convinced that they “know” something, they must believe and accept that it is true and be able to justify this truth. In science, justification plays a crucial role in qualifying the veracity of data and conclusions. Much of the discussion about this question centered on the notion of provenance, meaning the “place of origin” of an item or idea. It was noted that science is built on a culture of attribution and credit, so citations naturally provide a means of establishing conceptual provenance and justifying answers. Because LLMs are specifically designed for text processing, though, they cannot provide insight into the quality of scientific research processes such as experimental design and data collection techniques. Some participants agreed that the scope of the question may be too narrow because LLMs can only develop outputs based on access to scientific literature.

As an example of the perils associated with using LLMs to generate content, Kaylin described a recent ChatGPT “horror story” in which a user prompted the tool to produce a biography about a fictional scientist. The LLM complied with the request, writing authoritative-sounding paragraphs about an entirely fabricated person. This anecdote stresses the importance of establishing guardrails for LLM output and ensuring that verifiable facts are the basis of generated results.

Many IBM and IMPACT representatives offered additional comments and posed forward-thinking questions, some of which are featured below.

How does an LLM know what truth is, especially when science evolves?

The discussion around this question began somewhat jokingly with the comment that “truth is relative,” but several participants emphasized how ephemeral truth may be in various contexts. For instance, LLMs trained on time-restricted information will, by consequence, be similarly restricted in their outputs. One participant pointed out that, if queried about pandemics, a model trained exclusively on information collected prior to 2020 would not even mention COVID-19.

The fundamental nature of science as an exploratory practice ensures that the knowledge that constitutes “truth” will inevitably change as new insights and discoveries are gleaned from further experimentation. Science is “self-correcting,” meaning that truth can be refined through an evolving understanding of phenomena.

Multiple people noted that there are potential ways to prime LLMs to be better truth-seekers. According to one participant, models come equipped with datasheets, which are designed to be “dispassionate statements of facts.” These datasheets can serve as reliable, factual references that provide information about data sources. Associating model output with transparent knowledge about data curation lends credibility to generative LLMs. Creating profiles for the data used to train these models would also highlight the formative characteristics of datasets. Pre-processing can also reduce noise and other problems in the data, such as bias, to minimize issues with model output. Ultimately, participants agreed that providing as much information as possible about the facets, distribution, and sources of the data used to train models is the best path forward to temper expectations about the truthfulness of LLM output.
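To make the datasheet idea concrete, here is a minimal Python sketch of what such a record might hold. The `Datasheet` class, its field names, and the example values are all hypothetical illustrations, not a format discussed at the meeting or used by any particular model. The `covers` method echoes the earlier point about time-restricted training data: a model whose data ends in 2019 cannot be expected to know about events in 2020.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class Datasheet:
    """Illustrative (hypothetical) record describing a model's training data."""
    sources: List[str]
    collection_start: date
    collection_end: date
    preprocessing: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def covers(self, when: date) -> bool:
        """Return True if `when` falls inside the data collection window."""
        return self.collection_start <= when <= self.collection_end

    def summary(self) -> str:
        """One-line description that could be shown next to model output."""
        return (
            f"Trained on {len(self.sources)} source(s), "
            f"{self.collection_start.isoformat()} to "
            f"{self.collection_end.isoformat()}"
        )


# Example values are placeholders invented for this sketch.
sheet = Datasheet(
    sources=["example-journal-archive"],
    collection_start=date(2000, 1, 1),
    collection_end=date(2019, 12, 31),
    preprocessing=["deduplication"],
    known_limitations=["English-language text only"],
)

print(sheet.summary())
print("Covers March 2020?", sheet.covers(date(2020, 3, 11)))
```

Pairing a record like this with each response would let a reader check at a glance whether a question falls outside the period the model could plausibly know about.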

Is it possible for LLMs to give attribution for scientific works where appropriate?

When Kaylin posed this question to the group, IBM Research representative Avi Sil quickly answered “yes.” Many participants agreed that technological approaches already exist that can be applied to this problem, especially through the use of grounding. Grounded models are designed to access real-time, available information that can be used to inform model output. Based on a user query, an LLM could be used to retrieve a document, search for relevant text, and extract particular sections that can be accurately attributed to the author(s) or generate a response based on the extracted sections. Constructing applications with this capability is essentially an engineering problem, but it is both a possible and probable next step in improving LLM usability.
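The retrieve, search, and extract flow described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the `Passage` class, the word-overlap scoring, and the two-entry corpus with placeholder authors and titles are invented for the example, and a real grounded system would likely use a proper search index and an LLM to compose the final response.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Passage:
    """A chunk of text together with the source it should be credited to."""
    text: str
    authors: str
    title: str


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: List[Passage]) -> Optional[Passage]:
    """Return the passage sharing the most words with the query, or None."""
    query_tokens = tokenize(query)
    best, best_score = None, 0
    for passage in corpus:
        score = len(query_tokens & tokenize(passage.text))
        if score > best_score:
            best, best_score = passage, score
    return best


def answer_with_attribution(query: str, corpus: List[Passage]) -> str:
    """Quote the best-matching passage and cite where it came from."""
    passage = retrieve(query, corpus)
    if passage is None:
        return "No supporting passage found."
    return f'"{passage.text}" ({passage.authors}, {passage.title})'


# Placeholder corpus: the authors and titles are not real publications.
CORPUS = [
    Passage("Sea surface temperature rose during the study period.",
            "Author A", "Example Paper One"),
    Passage("Soil moisture retrievals improve drought monitoring.",
            "Author B", "Example Paper Two"),
]

print(answer_with_attribution("drought monitoring with soil moisture", CORPUS))
```

Because the answer is built from an extracted passage rather than generated freely, the citation always points at text that actually exists in the corpus, and a query with no match returns an explicit "not found" message instead of an invented answer.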

Is there a future for LLMs to estimate the probability of a scientific paper being correct?

This question prompted a conversation about how humans might assess the accuracy of a scientific paper versus how LLMs might approach the same task. When reviewing a publication, people might be inclined to place value on an author’s reputation and prominence and on the professional conferences at which the findings were presented. Ranking highly in these characteristics might indicate a significant probability that a paper contains trustworthy results and carefully stated conclusions. However, even if findings are shared at well-known conferences, which typically indicates that a presenter is held in high regard by the scientific community, there is no guarantee that the research itself will ultimately be deemed informative or even fundamentally correct. One participant commented that some of the “best results” are not always featured at “top conferences.”

LLMs might improve on human endeavors by judging papers based on a wider, less biased set of characteristics. An IBM researcher commented that LLMs could be trained to skew results towards sources that are deemed “more trustworthy” within a particular discipline and could assess whether a paper contradicts previously published research on the same topic. Kaylin agreed that “contradictory results are not always valued or prioritized” as they should be. Because the number of journals and the volume of published papers continue to increase, it is becoming more difficult to discern the quality and reliability of the science conducted. Another participant agreed, adding that “LLMs would do a better job than us of sorting through results.”

Still, humans play a crucial role as gatekeepers to scientific repute. Subject matter expert review of draft papers is a necessary step in determining whether or not content is worthy of publication. Establishing open access to peer-reviewed papers and datasets must also become more standardized to allow LLMs to tap into the broadest possible collection of training data.

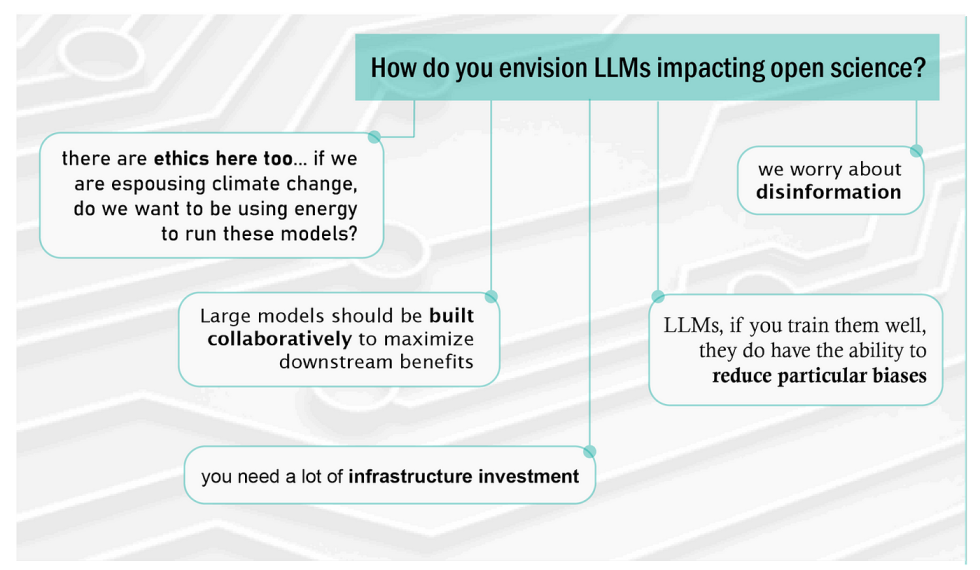

How do you envision LLMs impacting open science?

The primary considerations that emerged from responses to this question were ownership and cost. As the corpus of scientific work becomes more accessible, researchers will want to harness the power promised by LLMs. But who is going to develop and host these models? Will the models themselves be openly available, or will they have proprietary characteristics? Can models be built to scale or specialized for certain tasks or domains? And who will ultimately bear the energy costs associated with LLMs? These and other questions went unanswered, but articulating these concerns became a way of identifying the major potential issues and risks of incorporating LLMs into open science practices.

Many participants continued to voice optimism about the capabilities of LLMs and the opportunities they engender for crafting collaborative approaches to addressing global problems like climate change. Pontus Olofsson, who serves as the Satellite Needs Working Group (SNWG) Project Scientist, advocated for open access to LLMs developed for science because the sooner we “let people play around” with the models, the faster the scientific community can assess their performance and suggest how to fine-tune them for greater efficacy.

Some specific comments voiced by several participants are illustrated below.

While no grand solutions were drafted in this meeting, the questions presented by IMPACT team members prompted a high-level discourse that generated several key takeaway ideas about how LLMs may be used to enhance the openness of science in the future. Overall, stakeholders in the scientific and industrial communities are hopeful that intentional partnership and collaboration will mobilize the burgeoning potential of using LLMs to inspire novel, effective proposals for accomplishing large computational tasks like modeling climate change and sequencing DNA. As LLMs become an increasingly attractive option to deploy on accessible datasets (and as data access itself is bolstered by open science practices), important questions about which organizations will own, host, and fund models must be answered.

On a more functional level, there are specific steps that should be taken to optimize the availability and quality of LLM training data. Anyone involved in scientific data aggregation, processing, and publication will need to consider how to make information more broadly accessible and usable. Standardization will need to be a crucial component of these efforts. The potential downstream benefits of applying LLMs to datasets are enticing, but model accuracy and provenance should be prioritized, especially in science-based applications. Detailed datasheets should be paired with models to provide users with as much information as possible in assessing and interpreting model output.

Joint discussions like this one between science domain experts, machine learning researchers, and industry developers are fruitful ways of strategizing the successful implementation of LLMs for science. These conversations should continue in an effort to maximize the potential and mitigate the risks of LLMs and their implications for the future of open science.

More information about IMPACT can be found on the NASA Earthdata and IMPACT project websites.

Meeting participants included:

IBM Research representatives

Bishwaranjan Bhattacharjee, Avi Sil, Raghu Ganti, Campbell Watson, Linsong Chu, Nam Nguyen, Carlos Costa, Maya Murad, Priya Nagpurkar, Shyam Ramji, Kate Blair, Kommy Weldemariam, Bianca Zadrozny, Talia Gershon, Ranjini Bangalore

IMPACT Team Members

Rahul Ramachandran, Manil Maskey, Kaylin Bugbee, Iksha Gurung, Sujit Roy, Chris Phillips, Brian Freitag, Pontus Olofsson, Hamed Alemohammad, and Muthukumaran (Kumar) Ramasubramanian

University of Alabama in Huntsville (UAH) researchers

Ankur Kumar and Udaysankar Nair

NASA representatives

Mike Little, Andrew Mitchell, Dan Duffy, Tsengdar Lee, Mark Carroll, Benjamin Smith, Laura Rogers, Oza Nikunj, Robert Morris, Jon Ranson, Jacqueline Le Moigne, Cerese Albers

DOE/ORNL representatives

Dalton Lunga and Philipe Diaz

View LinkedIn profiles for Kaylin Bugbee and Rahul Ramachandran.