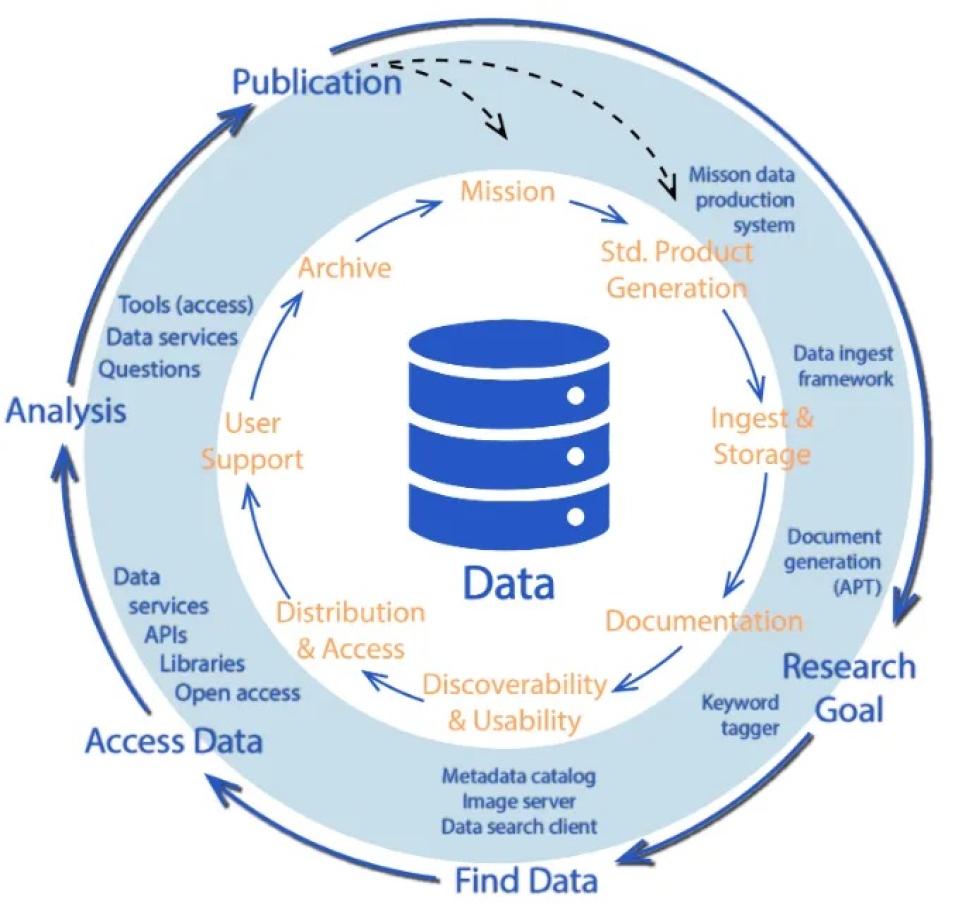

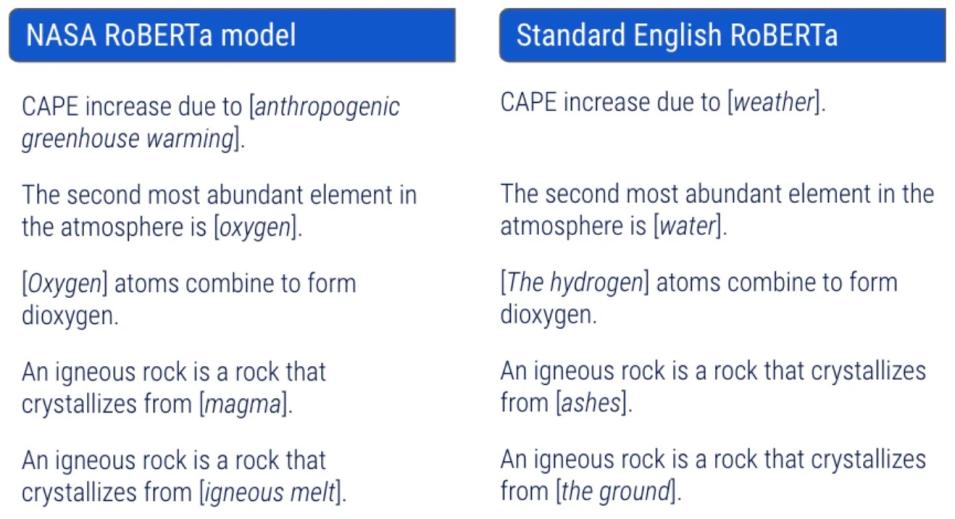

We plan to incorporate this new model into our existing operational processes. One application is to utilize this improved model in our science keyword tagging service. Using the Earth science-specific language model to build a keyword classifier will reduce inconsistencies and assist data stewards in objectively selecting optimal science keywords. Proper science keyword annotation of the data descriptions will, in turn, improve the search and discovery of the datasets.

Another application is to support the Satellite Needs Working Group (SNWG) assessment process. Every two years, all civil federal agencies send their Earth observation needs to NASA. In the past two cycles, we have received close to 120 needs descriptions. These needs have to be binned into different NASA science thematic areas and then sent to the program scientists and their teams leading the assessment process for each specific theme.

During the past two cycles, a single project principal was responsible for reading all the needs descriptions and assigning them to themes. Using the language model, we developed a simple thematic classifier that substantially reduces the project principal's effort and shortens the time needed to develop responses.
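The idea behind a thematic classifier can be illustrated with a minimal sketch: represent each need description and each theme as a vector, then assign the need to the most similar theme. This sketch assumes a plain bag-of-words representation with cosine similarity as a stand-in for the Earth science language model's embeddings, and the theme names and descriptions in `THEMES` are hypothetical, not NASA's actual thematic areas.

```python
import math
import re
from collections import Counter

# Hypothetical themes for illustration only; the real NASA thematic areas
# and the Earth science language model are not reproduced here.
THEMES = {
    "atmosphere": "aerosol cloud ozone air quality precipitation wind temperature",
    "ocean": "sea surface temperature ocean color salinity sea level coastal",
    "land": "land cover vegetation soil moisture crop forest wildfire",
}


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def classify(need: str, themes: dict = THEMES) -> str:
    """Return the name of the theme most similar to the need description."""
    need_vec = embed(need)
    return max(themes, key=lambda name: cosine(need_vec, embed(themes[name])))


print(classify("Daily soil moisture maps to support crop forecasting"))  # land
```

With language-model embeddings in place of `embed`, the same nearest-theme logic would capture semantic similarity rather than exact word overlap; in this sketch, a need that shares no words with any theme simply falls back to the first theme.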

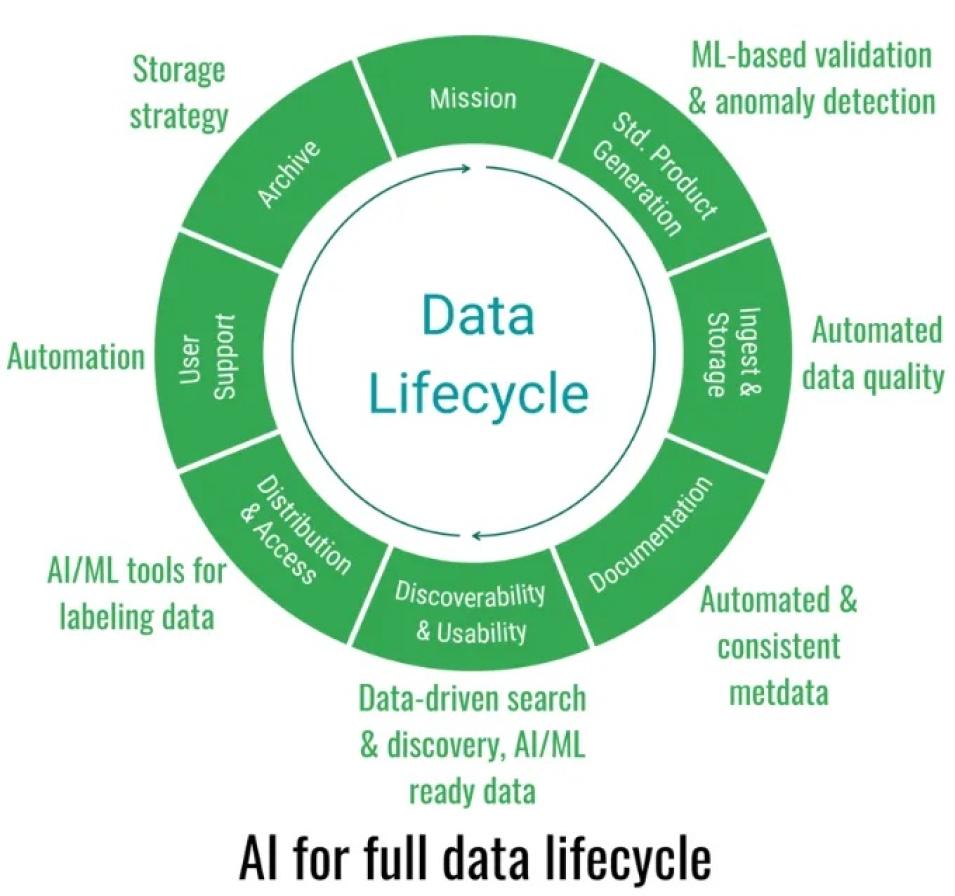

During our collaboration, the IBM Research team shared a paper on foundation models. Foundation models (FMs) are AI models pretrained on broad datasets using self-supervised learning, and they can be adapted to many different downstream tasks. Self-supervised pretraining relies on data with inherent structure, such as sequences, from which the model learns by predicting one part of the data from another; this removes the need for large labeled datasets. NASA's archive holds large volumes of multidimensional time-series data that could be used to create these foundation models.
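To make the self-supervised idea concrete, the minimal sketch below shows how training examples can be derived from an unlabeled sequence without any human annotation: either by predicting the next value from a window of past values, or by hiding some values and asking the model to recover them. The function names are hypothetical and the data is a toy list; this is not the pretraining procedure used in the paper.

```python
def make_next_step_pairs(series, window):
    """Build (input window, next value) pairs from an unlabeled sequence."""
    return [
        (series[i : i + window], series[i + window])
        for i in range(len(series) - window)
    ]


def mask_values(series, positions, mask_token=None):
    """Replace the values at `positions` with `mask_token`.

    Returns the masked sequence and a dict mapping each masked position
    to its original value, which serves as the training target.
    """
    masked = list(series)
    targets = {}
    for p in positions:
        targets[p] = masked[p]
        masked[p] = mask_token
    return masked, targets


# The "labels" come from the sequence itself.
print(make_next_step_pairs([1, 2, 3, 4, 5], 3))  # [([1, 2, 3], 4), ([2, 3, 4], 5)]
print(mask_values([10, 20, 30, 40], [1, 3]))     # ([10, None, 30, None], {1: 20, 3: 40})
```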

The paper was intriguing because the FM approach would address two of the three challenges reported at a 2020 workshop focused on advancing machine learning tools for NASA's Earth observation data. The first is the bottleneck caused by the limited availability of, and access to, large training datasets. The second is that existing ML models do not generalize well over space and time.

The question facing us is whether we should invest time and resources to build large foundation models for the data in our archives. Will it help our data users move away from the existing paradigm of building one machine learning model per application? Should we build FMs for specific disciplines within Earth science, or should they be built for a subset of our archives, i.e., our "Cadillac" datasets that already support a large number of downstream applications? Another fundamental question to investigate is whether these models capture the underlying physical processes.

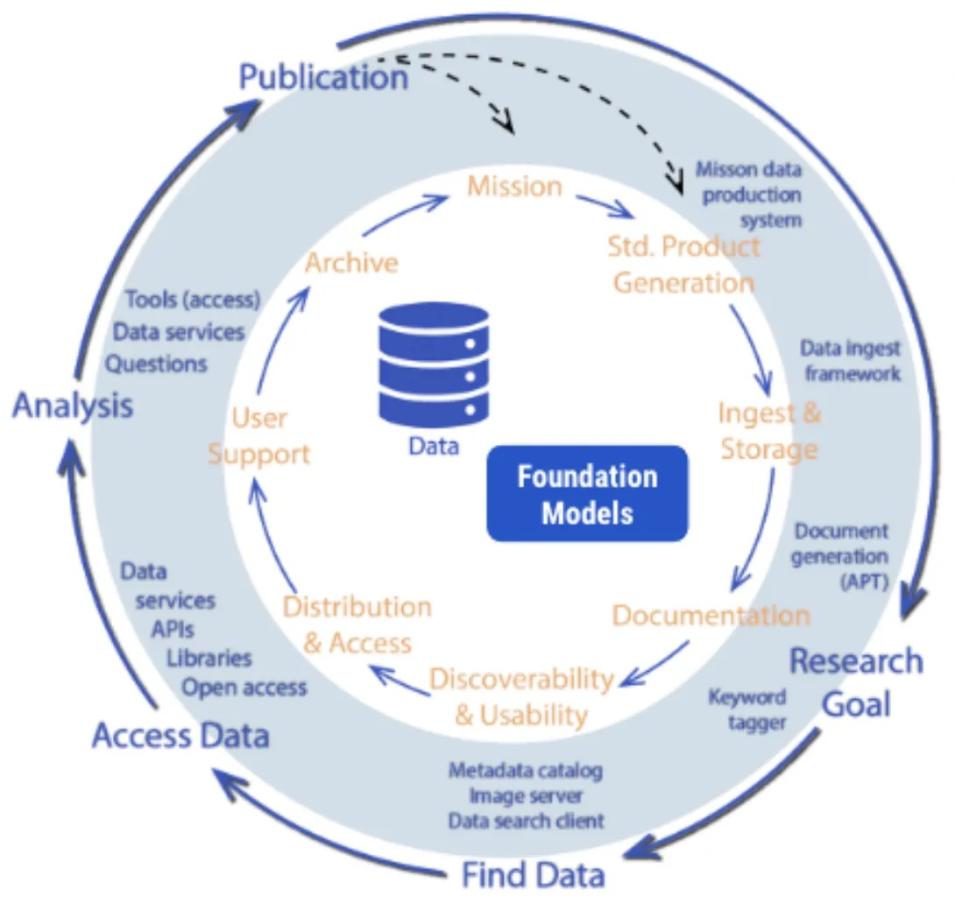

If foundation models fulfill their promised potential, they can play a pivotal role in accelerating science and helping uncover new insights from our archives. We envision a future in which our building blocks include not only the data but also different FMs that support both the data and research lifecycles.